10 Practical Ways to Get Your Brand Mentioned in AI Answers

As generative AI becomes the default interface for discovery, AI Answers are quietly replacing search result pages as the primary decision surface. Many users no longer compare ten links, read multiple reviews, or dig into long-form content. Instead, they ask one question and trust the synthesized response as a credible shortcut.

This change fundamentally alters how visibility works. Brands are no longer rewarded for ranking positions alone. They are increasingly rewarded for being mentioned inside AI Answers, where recommendations, comparisons, and explanations now happen. This is why AI Visibility and AI Search Visibility have become decisive competitive factors for SaaS, ecommerce, finance, and consumer brands.

Why Brands Struggle to Appear in AI Answers

Many brands fail to appear in AI-generated answers not because they lack content, but because LLMs struggle to clearly understand and reference them. Without consistent signals, clear context, and recognizable associations, brands are often overlooked when generative models select which information to include.

Why SEO Rankings No Longer Guarantee Inclusion

A common misconception is that ranking high on Google automatically leads to visibility in AI Answers. In practice, this correlation is weak. Many pages that dominate SERPs are never surfaced by LLMs because they are difficult to summarize, overly promotional, or structurally fragmented.

AI models do not evaluate content the same way search engines do. They are not selecting the “best page” but assembling the “safest explanation.” If a page contains mixed messaging, buried definitions, or excessive persuasion, the model tends to avoid it. This creates a false sense of success for teams that rely only on SEO dashboards while their brand remains invisible in AI Search environments.

Why Getting Your Brand Mentioned Requires Different Signals

For a brand to be mentioned, an LLM must be able to confidently answer three internal questions: what the brand is, when it is relevant, and why it fits the user’s intent. These signals are rarely explicit in traditional marketing content.

Vague positioning statements, inconsistent product descriptions, and abstract value propositions weaken AI Visibility. When uncertainty exists, AI systems default to omission. This is why many brands are technically present online but absent inside AI Answers.

10 Proven Tips to Get Your Brand Mentioned in AI Answers

Getting your brand mentioned in AI-generated answers requires more than visibility; it requires clarity. These tips focus on helping AI systems accurately recognize, associate, and reference your brand, increasing the likelihood that it appears in relevant AI answers.

1. Write Clear, Extractable Definitions

LLMs do not “read” content the way humans do. They extract, compress, and reuse information. A clear definition is the smallest reusable unit of trust for AI Answers. If your brand cannot be summarized in one or two precise sentences without losing meaning, the model is unlikely to reuse it.

A strong definition answers three things immediately:

- What the product or brand is

- Who it is for

- What problem it solves

Example (weak vs strong):

| Weak definition | Strong definition |
|---|---|
| “We are a modern platform helping teams work better.” | “Mention Network is an AI visibility platform that measures how often brands are mentioned and recommended inside ChatGPT, Gemini, and other AI answers.” |

The second version is safer for AI to reuse. Brands that invest in extractable definitions tend to see higher AI Visibility because models can cite them without ambiguity.

2. Use Comparison Pages to Teach AI Context

AI systems learn categories by contrast. If you never explain how you differ from alternatives, the model has to guess. Comparison pages remove that guesswork by explicitly defining competitive boundaries.

Instead of generic comparison blogs, use structured comparisons that highlight decision criteria users actually care about.

Example comparison table:

| Attribute | Product A | Product B |
|---|---|---|
| Target User | Small Teams | Enterprises |
| Pricing Model | Monthly | Annual |
| Core Strength | Speed | Customization |

This format does two things:

- It teaches the model how brands compete inside a category

- It improves AI Search Visibility by clarifying when your brand should be recommended

Case study:

In SaaS categories, brands with structured comparison tables are more frequently included in “best tool for…” AI Answers than brands with only feature pages.

3. Maintain Consistent Descriptions Across Platforms

LLMs cross-check facts across multiple sources. If your homepage, Crunchbase profile, press mentions, and review sites describe you differently, the model loses confidence and avoids mentioning you.

Consistency matters more than creativity here.

What to standardize across platforms:

- One primary brand description

- One core use case statement

- One category label

Example inconsistency:

- Website: “AI analytics platform”

- Blog: “marketing intelligence tool”

- Press: “data infrastructure company”

To AI, this looks like three different entities. Brands that align their descriptions across platforms are more likely to be mentioned inside AI Answers.
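If you want to audit this quickly, the Python sketch below checks whether each platform's description uses the single category label you standardized on. It is a minimal illustration under stated assumptions: the descriptions are pasted in by hand, and the brand name "Acme", the platform texts, and the label are hypothetical placeholders modeled on the inconsistency example above.

```python
"""Audit brand descriptions for a consistent category label.

All platform names and descriptions below are hypothetical placeholders;
replace them with the text each platform actually shows for your brand.
"""

# The one category label you decided every platform should use (hypothetical).
CANONICAL_LABEL = "AI visibility platform"

descriptions = {
    "Website": "Acme is an AI visibility platform for marketing teams.",
    "Blog": "Acme is a marketing intelligence tool.",
    "Press": "Acme is a data infrastructure company.",
}


def find_inconsistent(descs: dict[str, str], label: str) -> list[str]:
    """Return the platforms whose description does not contain the label."""
    wanted = label.lower()
    return [name for name, text in descs.items() if wanted not in text.lower()]


if __name__ == "__main__":
    for platform in find_inconsistent(descriptions, CANONICAL_LABEL):
        print(f"{platform}: does not use the label '{CANONICAL_LABEL}'")
```

A plain substring check is deliberately strict: it does not treat synonyms as matches, which fits the goal of one label used verbatim everywhere.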

4. Publish Use-Case Focused Content

AI Answers are intent-driven, not feature-driven. Generic feature lists tell the model what your product has, but not when it should be recommended.

Use-case content answers contextual questions like:

- “Best tool for small remote teams”

- “Best solution for compliance-heavy industries”

Effective use-case pages include:

- Situation or scenario

- Constraints (budget, scale, industry)

- Why the product fits that situation

This helps AI map your brand to real-world contexts, improving AI Search Visibility for long, intent-rich queries.

5. Answer Real User Questions Directly With FAQs

AI Answers often reuse FAQ-style language. Content that mirrors how users naturally ask questions is easier to extract and safer to reuse. Instead of hiding answers inside long paragraphs, make them explicit.

Example:

Q: “What is AI Visibility?”

A: “AI Visibility measures how often and in what context a brand appears inside AI-generated answers.”

Direct answers reduce interpretation risk and increase AI Visibility because the model can lift them without rewriting.

6. Use Structured Data Where Appropriate

Structured data gives AI systems explicit signals about meaning and relationships. While models do not rely on schema alone, schema reduces ambiguity during extraction.

High-impact schema types for AI Answers:

- FAQ Schema for question-based content

- Product Schema for attributes and pricing

- Organization Schema for entity clarity

Brands using structured data tend to show higher AI Search Visibility because their information is easier to parse, classify, and reuse across AI systems.
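As an illustration, the Python sketch below builds FAQ and Organization markup as JSON-LD using the schema.org types `FAQPage`, `Question`, `Answer`, and `Organization`. The question and answer reuse the FAQ example from tip 5 and the description reuses the strong definition from tip 1; treat all values as placeholders and check the output with a structured data validator before publishing.

```python
"""Generate schema.org JSON-LD for an FAQ section and an Organization.

A sketch, not a complete markup strategy: the field values are illustrative
and should be replaced with your own brand's facts.
"""
import json


def faq_page(pairs: list[tuple[str, str]]) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def organization(name: str, url: str, description: str) -> dict:
    """Build a schema.org Organization object for entity clarity."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
    }


faq = faq_page([
    ("What is AI Visibility?",
     "AI Visibility measures how often and in what context a brand "
     "appears inside AI-generated answers."),
])
org = organization(
    "Mention Network",
    "https://mention.network",
    "An AI visibility platform that measures how often brands are "
    "mentioned and recommended inside AI answers.",
)

if __name__ == "__main__":
    # Each object is embedded in its own <script type="application/ld+json"> tag.
    for obj in (faq, org):
        print('<script type="application/ld+json">')
        print(json.dumps(obj, indent=2))
        print("</script>")
```

Product markup follows the same pattern with the schema.org `Product` type and its attributes.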

7. Reinforce Authority With External Validation

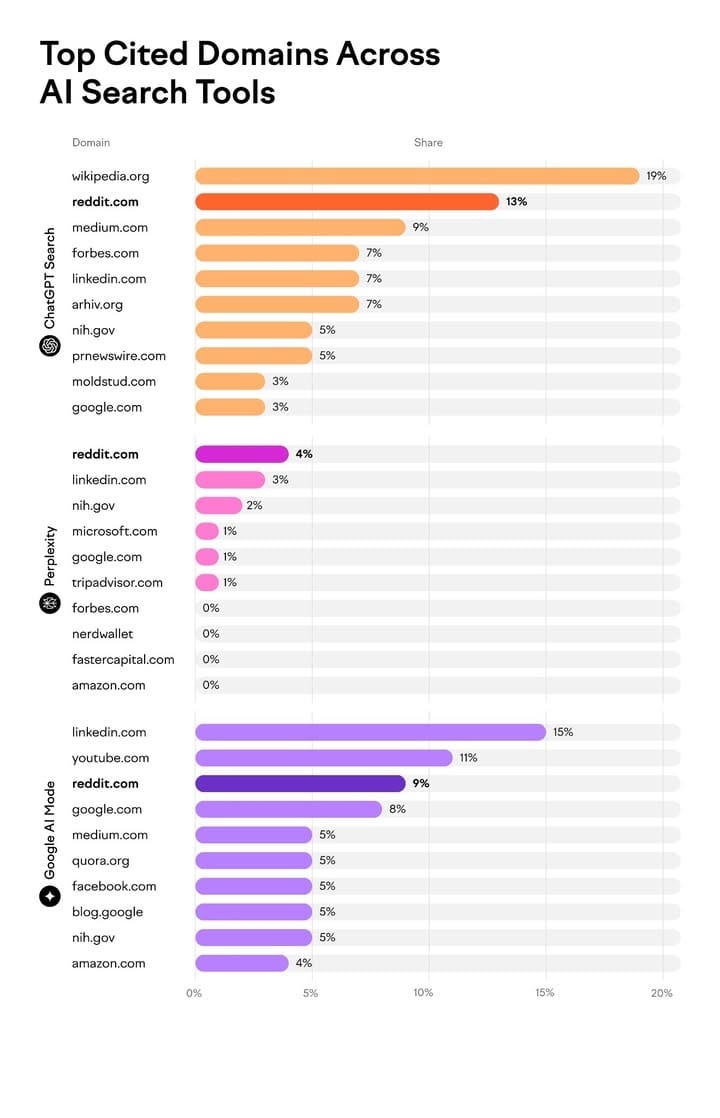

The chart shows a consistent pattern across AI search tools like ChatGPT, Perplexity, and Google AI Mode: models overwhelmingly cite a small group of trusted, third-party domains such as Wikipedia, Reddit, LinkedIn, Medium, and major publishers. These sources dominate AI answers not because of marketing strength, but because they represent collective validation, neutral tone, and cross-checked information.

For brands, the implication is clear. AI systems build confidence by triangulating facts across environments they already trust. When your brand appears on these platforms, through references, citations, community discussions, or authoritative profiles, it lowers the perceived risk for the model to reuse and mention your brand in AI answers. External validation is not about traffic. It is about being present where AI already believes the truth lives.

8. Avoid Marketing Language and Focus on Facts

LLMs avoid exaggerated claims because they are risky to reuse. Phrases like “best in class” or “industry-leading” are often ignored. Your brand should replace persuasion with precision.

Example:

- Marketing: “The most powerful platform ever built”

- Factual: “Supports monitoring across ChatGPT, Gemini, Claude, and Perplexity”

Factual statements improve AI Visibility because they are verifiable and safe to repeat inside AI Answers.
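One lightweight way to enforce this before publishing is to scan copy for superlatives. The Python sketch below flags phrases from a small, hypothetical list seeded with the examples in this section. It only catches exact phrases, so treat it as a first-pass review aid, not a judgment of whether a claim is verifiable.

```python
"""Flag unverifiable marketing phrases in product copy.

The phrase list is a hypothetical starting point drawn from the examples
above; extend it with the superlatives your own copy tends to use.
"""
import re

MARKETING_PHRASES = [
    "best in class",
    "best-in-class",
    "industry-leading",
    "most powerful",
    "ever built",
]

# One case-insensitive pattern; word boundaries avoid matches inside words.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(p) for p in MARKETING_PHRASES) + r")\b",
    re.IGNORECASE,
)


def flag_marketing_language(text: str) -> list[str]:
    """Return the marketing phrases found in text, lowercased, in order."""
    return [match.group(0).lower() for match in _PATTERN.finditer(text)]


if __name__ == "__main__":
    print(flag_marketing_language("The most powerful platform ever built"))
    # ['most powerful', 'ever built']
    print(flag_marketing_language(
        "Supports monitoring across ChatGPT, Gemini, Claude, and Perplexity"))
    # []
```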

9. Monitor AI Search Visibility Regularly

AI behavior changes as models update and user queries evolve. Without monitoring, brands cannot detect visibility loss or competitive shifts. Regular monitoring helps answer questions like:

- Which AI systems mention us most often?

- Which competitors are replacing us?

- Which attributes are being extracted?

Continuous tracking supports sustained AI Visibility improvement instead of one-time optimization.
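Once you have collected answers for a fixed set of prompts, the first two questions above reduce to simple counting. The Python sketch below assumes the answers are already gathered (manually or with a monitoring tool) as (AI system, answer text) pairs; the brand "Acme", the competitors "Globex" and "Initech", and the sample answers are all hypothetical.

```python
"""Summarize brand and competitor mentions in a batch of collected AI answers.

The brand names and answers below are hypothetical sample data.
Matching is a case-insensitive substring check, which is a simplification.
"""
from collections import Counter

BRAND = "Acme"
COMPETITORS = ["Globex", "Initech"]

answers = [
    ("ChatGPT", "For small teams, Acme and Globex are popular choices."),
    ("ChatGPT", "Globex is often recommended for enterprises."),
    ("Gemini", "Initech offers the most customization."),
    ("Perplexity", "Acme is an AI visibility platform for marketers."),
]


def mention_rate_by_system(rows: list[tuple[str, str]], brand: str) -> dict[str, float]:
    """Fraction of each AI system's answers that mention the brand."""
    totals: Counter = Counter()
    hits: Counter = Counter()
    for system, text in rows:
        totals[system] += 1
        if brand.lower() in text.lower():
            hits[system] += 1
    return {system: hits[system] / totals[system] for system in totals}


def competitor_mentions(rows: list[tuple[str, str]], competitors: list[str]) -> Counter:
    """Count how many answers mention each competitor."""
    return Counter(
        name
        for _, text in rows
        for name in competitors
        if name.lower() in text.lower()
    )


if __name__ == "__main__":
    for system, rate in mention_rate_by_system(answers, BRAND).items():
        print(f"{system}: {BRAND} mentioned in {rate:.0%} of answers")
    print("Competitor mentions:", dict(competitor_mentions(answers, COMPETITORS)))
```

Substring matching will over-count a brand name that is also a common word and will miss paraphrased mentions. Rerunning the same prompts on a schedule and comparing the rates over time is what turns this snapshot into monitoring.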

10. Optimize for Multiple AI Systems

ChatGPT, Gemini, Claude, and Perplexity do not surface the same brands. Each model has different training data, retrieval logic, and preferences. Optimizing for one system is not enough.

Multi-model optimization helps you:

- Reduce dependency on a single AI ecosystem

- Increase total AI Search Visibility

- Stay resilient as models evolve

Brands visible across multiple AI systems are more likely to appear consistently in AI Answers over time.

Common Mistakes That Prevent Brands From Being Mentioned in AI Answers

Many brands miss out on AI-generated mentions due to avoidable mistakes in how their content is structured and positioned. These common issues make it harder for LLMs to recognize, trust, and reference a brand, causing it to be excluded from relevant AI answers even when the information exists.

| Mistake | What AI “sees” | Result | What to change |
|---|---|---|---|
| Keyword-heavy writing | Repetitive, low-information text | Low reuse, fewer mentions | Write definitions + examples, reduce fluff |
| No competitor context | No positioning signals | Not selected in comparisons | Add comparison pages and decision criteria |
| SEO-only mindset | Ranking without extractable insight | Traffic but no AI inclusion | Build AI-ready structure and summaries |

- Over-Optimizing for Keywords Instead of Clarity

Repeating keywords does not help you appear in AI Answers. LLMs prioritize content that explains concepts cleanly and can be reused without distortion. Pages written mainly for keyword density often fail because the model cannot extract a precise definition or insight with confidence. When clarity is low, AI simply skips the source.

- Ignoring Competitive Context

Most AI queries are comparative by nature. If your content never mentions alternatives, use cases, or decision criteria, the model has no way to position you inside a shortlist. Competitors that clearly explain when they are a better or worse choice are far more likely to be mentioned.

- Assuming SEO Performance Equals AI Visibility

High rankings and traffic do not guarantee inclusion in AI Answers. Many pages that rank well are still invisible to AI because they lack extractable structure, clear summaries, or factual framing. AI Visibility requires content built for reuse, not just for clicks.

Conclusion

Being mentioned in AI Answers is no longer a side effect of good SEO. It is a deliberate outcome of how clearly, accurately, and contextually a brand presents itself to AI systems. Brands that focus only on rankings, traffic, or keyword density often miss the real battlefield where discovery now happens: inside the answer itself.

To win AI Visibility, content must be built for extraction, comparison, and trust. That means prioritizing clarity over persuasion, acknowledging competitors, reinforcing authority with verifiable signals, and continuously monitoring how AI represents your brand. The brands that adapt early will not just be visible; they will be chosen.

You can try a free AI Visibility tool at mention.network to see how your brand shows up in AI answers.

If you have any questions, email us at [email protected] or book a quick call with our team for free support.

FAQs

Q1: Why do some high-ranking pages never appear in AI Answers?

A1: Because ranking does not guarantee extractability. AI systems prefer content that is easy to summarize, factually scoped, and reusable without risk. Many ranking pages are optimized for clicks, not for AI reuse.

Q2: Do keywords still matter for AI Answers?

A2: Keywords help with topic detection, but they are not the deciding factor. AI prioritizes semantic clarity, definitions, and contextual explanations over keyword repetition.

Q3: How important is competitor comparison for AI Visibility?

A3: Very important. Most AI Answers are comparative by default. Content that explains how a brand differs from alternatives gives AI the context it needs to include the brand in recommendations.

Q4: Is AI Visibility the same as SEO performance?

A4: No. SEO measures traffic and rankings, while AI Visibility measures presence inside AI-generated answers. A brand can perform well in SEO and still be invisible in AI Answers.

Q5: How can brands track whether they are being mentioned by AI?

A5: By using AI Visibility tools that monitor real AI Answers across models like ChatGPT, Gemini, Claude, and Perplexity. These tools reveal mentions, descriptions, and competitive positioning that traditional SEO platforms cannot see.