AI Agents and the New Landscape of AI Visibility

The internet, once a vast collection of interconnected pages designed for human consumption, is rapidly transforming into an intricate data ecosystem optimized for artificial intelligence. At the heart of this evolution lies the rise of AI Agents: autonomous software entities that leverage sophisticated Large Language Models (LLMs) to understand, reason, plan, and execute tasks. Their insatiable demand for data is not only reshaping network traffic but, more critically, redefining the very concept of "visibility" in the digital realm. For businesses and content creators, understanding AI Visibility is no longer a niche concern; it is a strategic imperative for future relevance.

The period from May 2024 to May 2025 marked a pivotal shift, revealing a dramatic re-prioritization of data collection by the tech giants powering the AI revolution. This era has ushered in a new understanding of digital prominence: one where being discoverable by an autonomous AI is as critical as, if not more critical than, ranking for a human query.

- AI Agents Redefine Discovery: The emergence of powerful AI Agents shifts the focus from traditional search engine rankings to being a direct, trusted, and accessible data source for autonomous AI systems that mediate information for users.

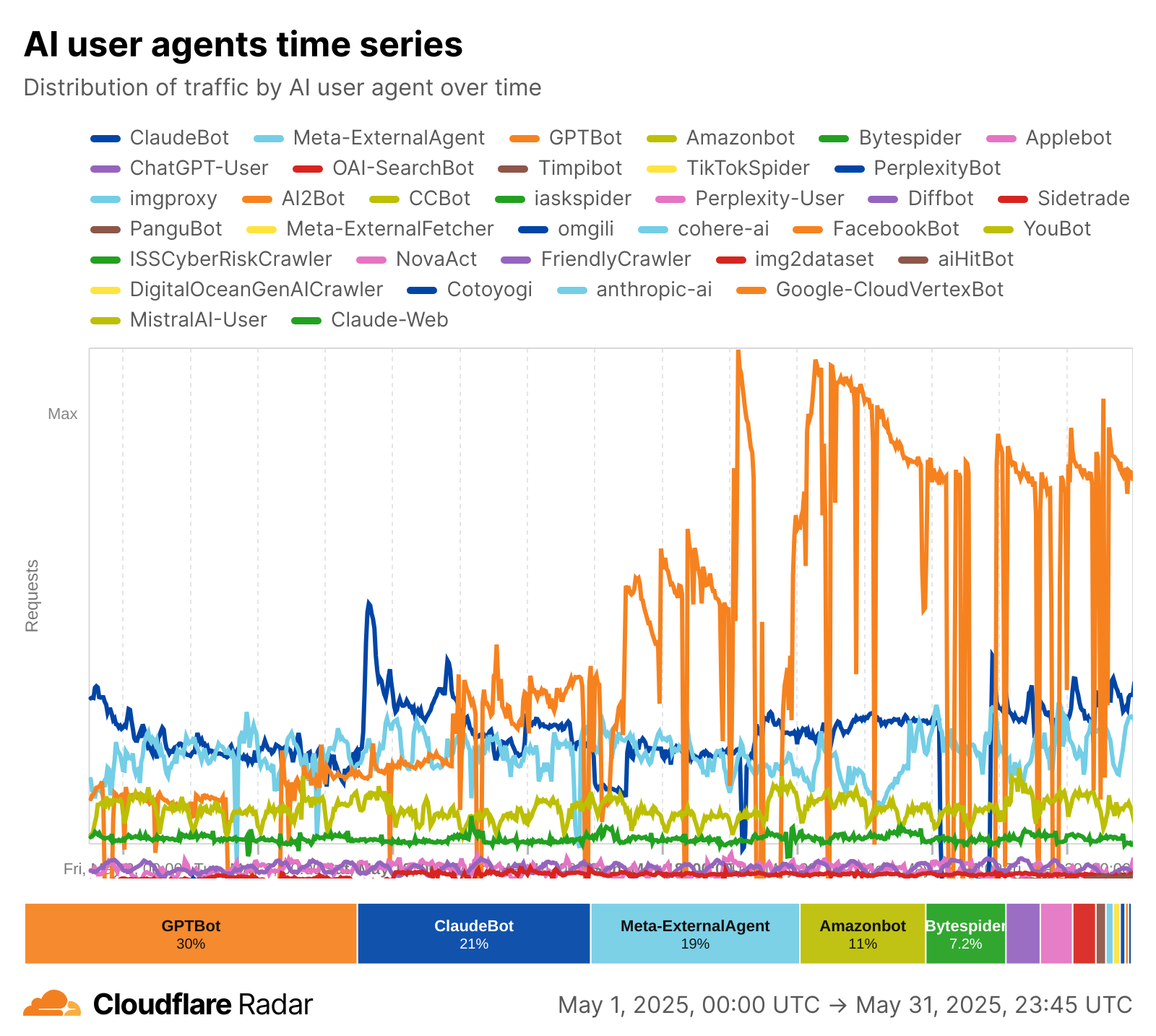

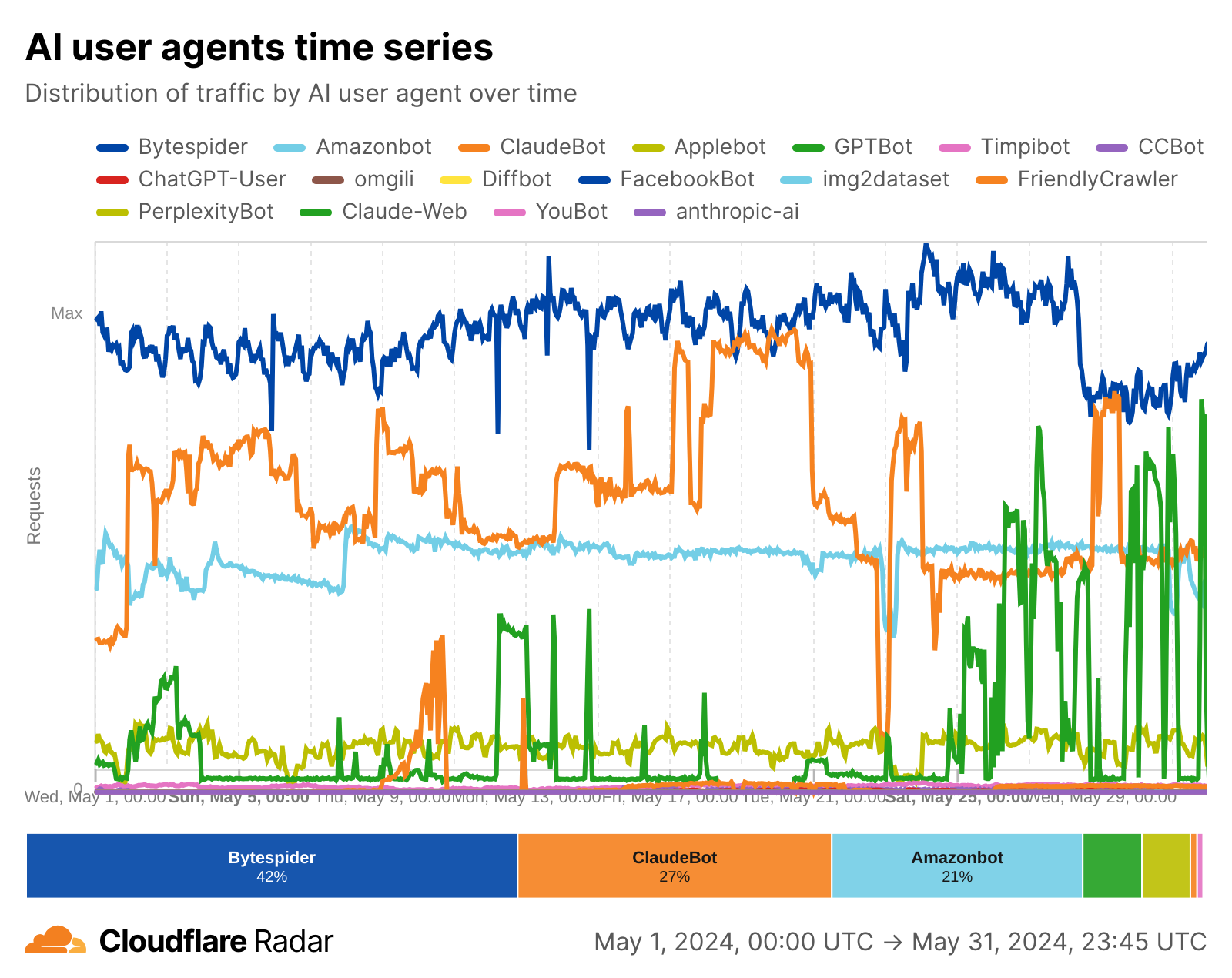

- The Data Arms Race: A significant reordering occurred among AI-specific crawlers, with GPTBot (OpenAI) surging to a dominant 30% share and Meta-ExternalAgent (Meta) making a strong 19% entry. This reflects a fierce competition to acquire the training data essential for superior AI Agent performance.

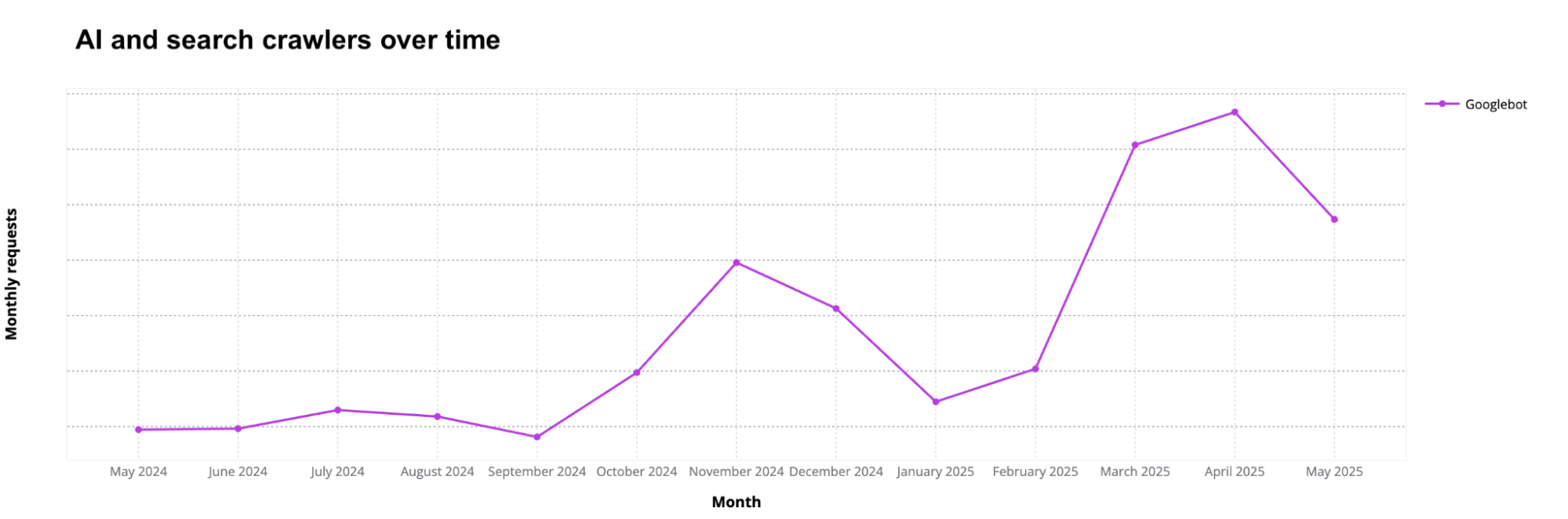

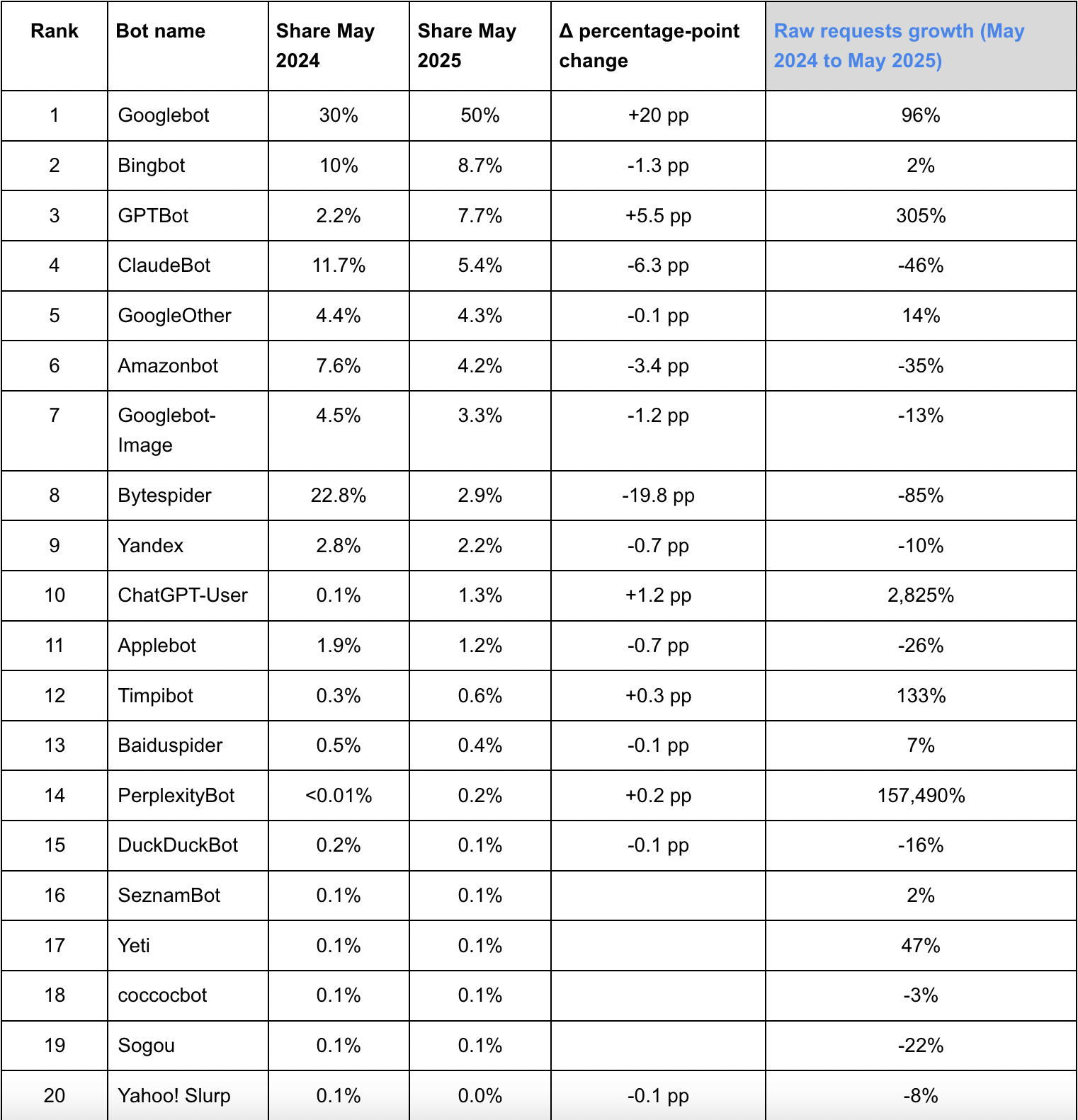

- Google's Dual Dominance: Googlebot's 96% growth in raw request volume and its capture of 50% of combined search and AI crawling traffic underscore Google's strategic intent to power both traditional search and its own AI Agents with an unparalleled data supply.

- Hyper-Growth in Agent-Driven Interaction: The astronomical growth of crawlers like ChatGPT-User (2,825% increase) and PerplexityBot (157,490% increase) demonstrates rapid adoption of AI Agents for real-time information retrieval, fundamentally altering how users access web content.

- The Control Conundrum: Despite the critical shift, only 14% of top domains explicitly manage AI bot access via robots.txt. This highlights a significant lag in content owners adapting their digital strategies to the realities of AI Visibility.

From PageRank to AgentRank: The Evolution of Digital Prominence

For decades, the metric of success for online content was its search ranking: its position on a search engine results page, shaped in Google's case by link-analysis algorithms such as PageRank. Websites invested heavily in SEO, optimizing for keywords, backlinks, and technical performance, all to capture the fleeting attention of a human user performing a query. This paradigm, largely built on the indexing power of traditional crawlers, is now being fundamentally challenged by the rise of AI Agents.

An AI Agent doesn't "search" in the traditional sense; it "reasons," "synthesizes," and "executes." When a user asks an AI Agent a question or assigns it a task, the agent doesn't necessarily direct them to a list of blue links. Instead, it may directly provide an answer, compose a document, or complete a transaction, drawing on its vast internal knowledge base and, crucially, making real-time calls to the web for current, contextual information.

This shift means that true AI Visibility is less about being found by a human browser and more about being a trusted, accessible, and semantically intelligible data source for an autonomous system. If your content is effectively ingested, understood, and deemed authoritative by an LLM, it becomes a component of the AI Agent's knowledge, directly influencing the answers and actions it generates. This represents a more profound level of visibility than a mere search ranking.

The Data Arms Race: Fueling the Agentic Future

The dramatic reordering of the AI-only crawling landscape vividly illustrates the intense competition for the raw material of AI: data. The surge of OpenAI’s GPTBot from a 5% share to a commanding 30%, alongside the robust entry of Meta-ExternalAgent at 19%, is a testament to the immense resources being poured into feeding these foundational models. This isn't simply about volume; it's about acquiring the breadth, depth, and diversity of data that allows AI Agents to perform nuanced reasoning, exhibit robust problem-solving, and provide truly intelligent assistance. A source from Cloudflare stated:

The decline of Bytespider, formerly the largest AI crawler, from a 42% share to 7.2% underscores the brutal pace of this data arms race. Market leadership in AI is transient; it demands continuous investment in both model development and the data infrastructure to sustain it. Companies that fall behind in data acquisition risk their AI Agents becoming less accurate, less current, and ultimately, less competitive.

The aggressive expansion of these dedicated AI crawlers directly correlates with the capabilities of the AI Agents they power. A more comprehensive, up-to-date, and diverse dataset enables agents to:

- Reduce Hallucinations: Grounding LLMs in verifiable web data minimizes the generation of factually incorrect or nonsensical outputs.

- Enhance Reasoning: Access to a wider array of textual and multimedia data improves an agent's ability to draw logical conclusions and synthesize information.

- Improve Task Execution: Agents capable of accessing real-time web information can perform more current and relevant actions, from booking travel to summarizing breaking news.

Therefore, for these tech giants, AI Visibility is about ensuring their crawlers can access and process the maximum amount of valuable web data, a strategic move that directly impacts their future market share in the burgeoning agent economy.

Google's Double Play: Dominating Search and AI Visibility

Google's strategy in this evolving landscape is a masterclass in leveraging incumbency. Its Googlebot, traditionally the king of search indexing, saw a staggering 96% increase in raw request volume, catapulting its share of overall search and AI crawling traffic to a dominant 50%. This isn't merely Google maintaining its lead; it’s Google fundamentally re-architecting its data collection for an AI-first world.

This aggressive expansion serves a dual purpose. First, it ensures Google maintains the most comprehensive and up-to-date index for its traditional search engine, a critical component even as AI integration grows. Second, and more importantly for AI Visibility, this scale provides an unparalleled data feedstock for Google's own AI Agents and integrated AI features, such as the newly launched AI Overviews. These AI-powered summaries require a broad, contextual understanding of the web, making Googlebot's escalated activity a necessity.

The existence and growth of GoogleOther, a crawler explicitly tasked with "research and development," further solidifies Google's commitment to using its vast crawling infrastructure to explore and develop next-generation AI Agent capabilities. This indicates a proactive, multi-front approach: perfecting current AI integration while simultaneously laying the groundwork for future autonomous systems. For webmasters, Google's dominance means that optimizing for Google's evolving AI capabilities is critical for both traditional SEO and the new frontier of AI Visibility.

The Agentic Shift: Hyper-Growth in Real-Time Interaction

Perhaps the most compelling evidence of the rise of AI Agents and the shift in digital visibility comes from the explosive growth of crawlers associated with direct user or API interactions. ChatGPT-User, the user agent ChatGPT uses when it visits a web page in response to a user's request, saw its requests surge by an astonishing 2,825%. This isn't a bot training a model; it's a model (or an agent powered by it) actively querying the web to fulfill user prompts in real time. This signifies a massive scaling of AI Agents directly mediating user interaction with the web.

Even more indicative of the future is the meteoric rise of PerplexityBot, which recorded a truly remarkable 157,490% increase in raw requests. Perplexity.ai's core value proposition is an "answer engine" that synthesizes information and provides direct, cited responses, much like a sophisticated AI Agent would. This hyper-growth suggests that a growing number of users are bypassing traditional search, opting instead for intelligent agents that deliver immediate, comprehensive answers drawn directly from the live web.
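Percentage increases this large are easier to grasp as multipliers. A 100% increase doubles the volume, so a crawler's new request count is its old count times 1 + p/100. The short Python sketch below applies that conversion to the increases quoted in this article; it only restates the reported percentages and assumes nothing about the underlying request counts (the function name `growth_multiplier` is illustrative).

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a multiple of the original volume.

    A 100% increase doubles the volume (multiplier 2.0), so the
    multiplier is 1 + percent_increase / 100.
    """
    return 1 + percent_increase / 100


# Percentage increases in raw requests quoted in this article.
reported_increases = {
    "Googlebot": 96,
    "ChatGPT-User": 2_825,
    "PerplexityBot": 157_490,
}

for crawler, pct in reported_increases.items():
    print(f"{crawler}: +{pct:,}% -> {growth_multiplier(pct):,.2f}x the baseline volume")
```

By this arithmetic, Googlebot's 96% growth means about 1.96 times its earlier volume, ChatGPT-User's 2,825% about 29 times, and PerplexityBot's 157,490% about 1,576 times. A multiplier that large also implies the starting volume was tiny relative to today's, so it speaks more to the speed of adoption than to absolute traffic.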

For content creators, this is a clarion call: your content must not only be discoverable but also directly answerable by these agents. If an AI Agent can find, understand, and use your content to answer a user's query, your site achieves a new, profound level of AI Visibility. This requires a focus on structured data, clear semantic meaning, and authoritative content that can be easily ingested and trusted by machine intelligence.
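One widely used way to add structured data is a JSON-LD block using the schema.org vocabulary, embedded in the page's HTML. The Python sketch below generates a minimal `Article` description. The helper names `article_json_ld` and `script_tag` and all field values are hypothetical placeholders, and which properties a given AI system actually reads is an assumption this article does not settle.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, url: str) -> str:
    """Describe an article with schema.org vocabulary, serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    return json.dumps(data, indent=2)


def script_tag(json_ld: str) -> str:
    """Wrap JSON-LD in the <script> element that is placed in the page's HTML."""
    return f'<script type="application/ld+json">\n{json_ld}\n</script>'


# All values below are hypothetical placeholders.
print(script_tag(article_json_ld(
    headline="AI Agents and the New Landscape of AI Visibility",
    author="Jane Doe",
    date_published="2025-06-01",
    url="https://example.com/ai-visibility",
)))
```

Generating the markup from the same data that renders the page helps keep the machine-readable description and the visible content in agreement.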

The Content Control Crisis: Reclaiming AI Visibility

Despite the dramatic shifts, a significant lag exists in how content creators are adapting. Only about 14% of the top domains explicitly manage AI bot access using robots.txt directives. This leaves a vast "gray area" where content owners are either unaware, overwhelmed, or passively allowing their content to be scraped for AI training, often without compensation or clear understanding of its ultimate use.
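A quick way to see whether your own site falls into that 14% is to check whether its robots.txt contains any `User-agent` groups that name AI crawlers. The sketch below is a simple heuristic, not the methodology behind the 14% figure: `AI_CRAWLER_TOKENS` is a hypothetical list holding only the AI crawler names mentioned in this article, and `named_ai_crawlers` is an illustrative helper.

```python
# AI crawler user-agent tokens named in this article (not an exhaustive list).
AI_CRAWLER_TOKENS = {
    "gptbot", "chatgpt-user", "meta-externalagent", "bytespider", "perplexitybot",
}


def named_ai_crawlers(robots_txt: str) -> set[str]:
    """Return the AI crawler tokens that appear in User-agent lines.

    Matching is case-insensitive, and anything after '#' is treated as a comment.
    """
    found = set()
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "user-agent":
            token = value.strip().lower()
            if token in AI_CRAWLER_TOKENS:
                found.add(token)
    return found


sample = """\
User-agent: *
Disallow: /private/

User-agent: GPTBot
Disallow: /
"""

print(named_ai_crawlers(sample))  # {'gptbot'}
```

An empty result means the file relies only on wildcard rules, which is exactly the "gray area" described above.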

The dilemma is acutely felt with crawlers like GPTBot, which is simultaneously the most blocked and most explicitly allowed bot. This dichotomy highlights the tightrope content creators walk: blocking a major AI crawler might protect resources and IP, but it could also mean forfeiting a critical new channel for AI Visibility if future AI Agents predominantly source information from "allowed" sites.
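In robots.txt terms, walking that tightrope can mean opting one AI crawler out while leaving every other crawler, including search crawlers, allowed. The sketch below expresses a hypothetical policy that blocks GPTBot site-wide and checks it with Python's standard-library `urllib.robotparser`. That parser is a simplified implementation, so individual crawlers may apply slightly different matching rules, and the file remains a request that crawlers can choose to ignore.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: opt the whole site out of GPTBot,
# keep it open to every other crawler (including search crawlers).
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse text directly instead of fetching a URL

for agent in ("GPTBot", "Googlebot", "PerplexityBot"):
    allowed = parser.can_fetch(agent, "https://example.com/articles/ai-visibility")
    print(f"{agent}: {'allowed' if allowed else 'disallowed'}")
```

Run as written, it reports GPTBot as disallowed and Googlebot and PerplexityBot as allowed. Testing a policy this way before deploying it makes the trade-off explicit: every crawler you add to a `Disallow: /` group is a channel you are choosing to close.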

This lack of effective, widespread control via passive signals is driving a critical shift: from voluntary compliance to enforceable security. Websites are increasingly deploying Web Application Firewalls (WAFs) and other active network defenses to identify, rate-limit, or outright block aggressive AI crawlers. Tools like Cloudflare's AI Audit are emerging to help content creators enforce their policies more robustly.
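Rate limiting at the WAF layer is often built on a token bucket: each client gets a burst allowance that refills at a steady rate, and requests beyond it are rejected with HTTP 429. The sketch below keeps one bucket per crawler, identified by a substring of the User-Agent header. The `POLICY` table, its limits, and the `handle` function are hypothetical and only illustrate the idea; this is not how Cloudflare or any particular WAF implements it. User-Agent headers are also trivially spoofed, so production systems typically verify a crawler's IP address or network as well.

```python
from typing import Dict, Optional, Tuple


class TokenBucket:
    """Permits bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity
        self.updated: Optional[float] = None  # time of the previous request

    def allow(self, now: float) -> bool:
        if self.updated is not None:
            # Refill in proportion to the time elapsed, capped at capacity.
            elapsed = max(0.0, now - self.updated)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical policy: (burst capacity, refill rate per second), or None to block outright.
POLICY: Dict[str, Optional[Tuple[float, float]]] = {
    "gptbot": (10, 1.0),
    "perplexitybot": (5, 0.5),
    "meta-externalagent": None,
}

buckets: Dict[str, TokenBucket] = {}


def handle(user_agent: str, now: float) -> int:
    """Return the HTTP status to send: 200 serve, 429 rate-limited, 403 blocked."""
    ua = user_agent.lower()
    for token, limits in POLICY.items():
        if token in ua:
            if limits is None:
                return 403
            if token not in buckets:
                buckets[token] = TokenBucket(*limits)
            return 200 if buckets[token].allow(now) else 429
    return 200  # not a crawler named in the policy


# Twelve requests within the same instant from an illustrative GPTBot User-Agent string.
statuses = [handle("Mozilla/5.0 (compatible; GPTBot/1.1)", now=0.0) for _ in range(12)]
print(statuses.count(200), statuses.count(429))  # 10 2
```

Keying buckets by crawler keeps one aggressive bot from exhausting a limit shared with others, and answering 429 rather than 403 signals "slow down" instead of "go away", which leaves room for the negotiated access described below.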

This pivot signifies that AI Visibility is not merely about being found by an AI, but about exercising control over how and when an AI interacts with your content. It’s about negotiating a new digital contract where content value is recognized and protected, even as autonomous agents become the primary navigators of the internet.

Conclusion: Mastering the Algorithmic Future

The data unequivocally shows that the internet is being fundamentally rewired by the rise of AI Agents. For businesses, publishers, and content creators, the challenge and opportunity lie in mastering AI Visibility. This means:

- Beyond Keywords to Context: Optimize content for semantic understanding, ensuring it provides clear, factual, and authoritative answers that LLMs can easily ingest and trust.

- Structured Data as the New SEO: Embrace Schema Markup and other structured data formats to explicitly signal the meaning and relationships within your content to AI systems.

- Performance for Agents: Fast-loading, technically sound websites are not just for humans; they are also crucial for efficient crawling and ingestion by AI, helping make your content a preferred data source.

- Strategic Control: Actively manage AI crawler access through enforceable mechanisms like WAFs, and make informed decisions about robots.txt directives based on your content value and AI visibility goals.

- Adaptation is Survival: The pace of change driven by AI Agents is accelerating. Continuous monitoring of crawler traffic, AI model capabilities, and evolving web standards will be essential for maintaining digital relevance.
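Continuous monitoring can start with the access logs a server already writes. The sketch below assumes you have already extracted the User-Agent field from each log line (the sample strings are illustrative) and counts requests per known crawler, then converts the counts into shares. The `CRAWLERS` list and `crawler_shares` helper are hypothetical; substring matching will also count spoofed agents, and the shares cover only requests from the listed crawlers, so they are not directly comparable to the industry-wide figures cited in this article.

```python
from collections import Counter
from typing import Dict, Iterable

# Crawler names mentioned in this article, matched as case-insensitive substrings.
CRAWLERS = ["Googlebot", "GoogleOther", "GPTBot", "ChatGPT-User",
            "PerplexityBot", "Meta-ExternalAgent", "Bytespider"]


def crawler_shares(user_agents: Iterable[str]) -> Dict[str, float]:
    """Return each known crawler's share (in %) of requests from known crawlers."""
    counts: Counter = Counter()
    for ua in user_agents:
        lowered = ua.lower()
        for name in CRAWLERS:
            if name.lower() in lowered:
                counts[name] += 1
                break  # attribute each request to at most one crawler
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: 100 * n / total for name, n in counts.most_common()}


# Illustrative User-Agent values, as if extracted from an access log.
sample_user_agents = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; GPTBot/1.1)",
    "Mozilla/5.0 (compatible; PerplexityBot/1.0)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",  # ordinary browser, not counted
]

for name, share in crawler_shares(sample_user_agents).items():
    print(f"{name}: {share:.1f}%")
```

Running the same count over successive weeks or months shows how the mix of crawlers visiting your own site is shifting.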

The future of digital visibility belongs to those who understand, embrace, and strategically navigate the algorithmic fog created by AI Agents. Failing to adapt to this new reality is not just a missed opportunity; it is a direct threat to online presence and relevance in the autonomous digital age.

FAQ on AI Agents and AI Visibility

What is AI Visibility?

AI Visibility is how easily AI Agents and LLMs can find, understand, and trust your content. Unlike traditional SEO aimed at human clicks, it ensures your content is usable by AI systems that deliver answers directly.

Why do AI Agents matter?

AI Agents are quickly becoming the main way people access online information. If your content isn’t optimized for them, it risks being overlooked as users rely more on AI to search, summarize, and act.

How can I improve AI Visibility?

Create clear, authoritative, and structured content. Use Schema Markup, maintain a fast and technically sound website, and manage AI crawler access wisely.

What does the rise in ChatGPT-User and PerplexityBot traffic mean?

It shows AI systems are actively fetching and interpreting web content in real time, a major shift from human-driven search toward AI-mediated discovery.

Can I block AI crawlers?

You can request it via robots.txt, but compliance isn’t guaranteed. Stronger control requires tools like Web Application Firewalls (WAFs) to manage and restrict AI bot access.