Ranking the Best Platforms for Answer Engine Optimization (AEO)

AI Visibility is now the primary currency, and the reality is that most vendor platforms fundamentally overpromise. Success is no longer measured by where your link ranks, but by how often and how prominently your brand is cited within the generative answers of ChatGPT, Google AI Overviews, and Perplexity.

This is not a theoretical discussion. The Answer Engine Optimization (AEO) score, which measures how often and how prominently AI systems cite a brand in their generated responses, is the new marketing imperative. This guide simplifies 2025 vendor selection by prioritizing real performance data over inflated marketing claims, using the Answer Engine Optimization (AEO) ranking model as the industry benchmark.

- The New Rules: Traditional metrics like CTR are obsolete. AEO is the only metric that accurately tracks success in the zero-click environment where 37% of product discovery queries now begin in AI interfaces.

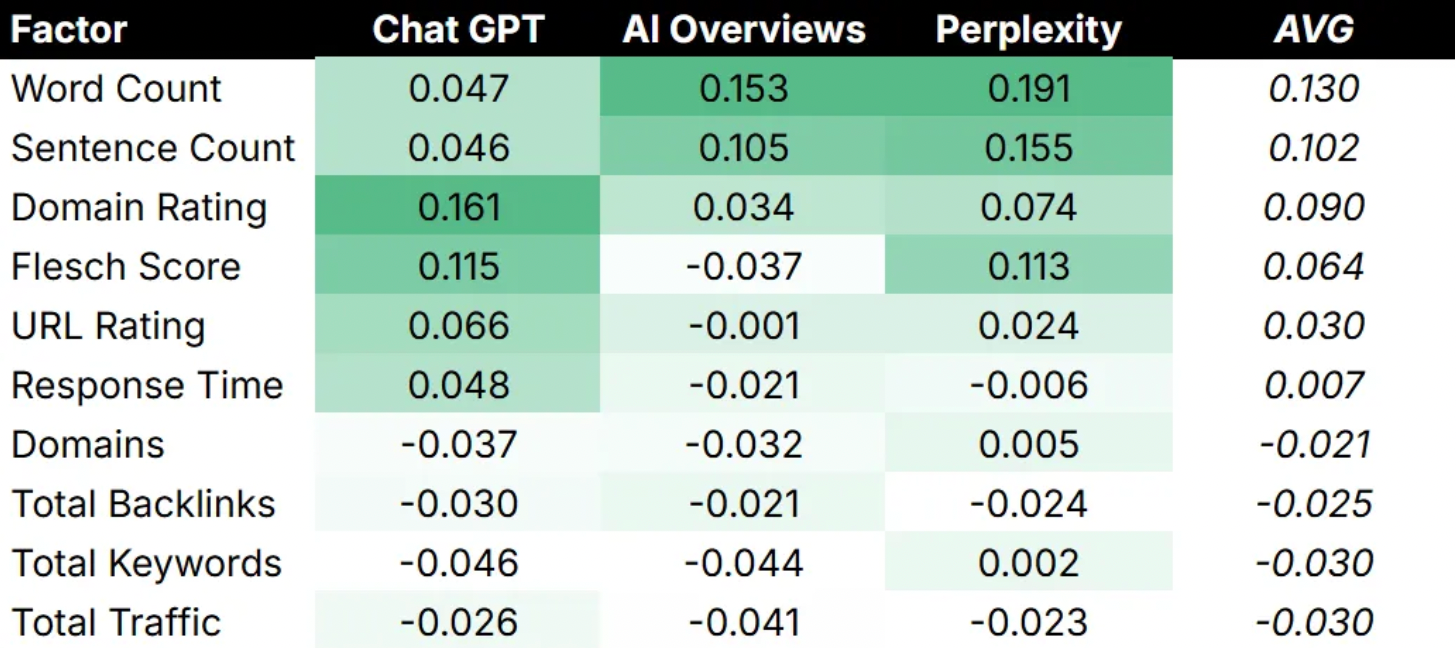

- Correlation Breakdown: Classic SEO factors (Backlinks, Keywords, Traffic) have a weak correlation with AI chatbot citations. Domain Rating matters most for ChatGPT, while Word Count influences Perplexity.

- The Content Hierarchy: Listicles dominate AI citations (25% share), making them the most efficient content format for AI Visibility.

- Semantic URLs Win: URLs with descriptive, natural language slugs (4–7 words) receive 11.4% more citations, acting as a powerful technical signal for the LLMs.

Why AEO is the Only Search KPI That Matters

With nearly 40% of product research shifting to generative AI interfaces, the traditional SEO scorecard is financially irrelevant. Answer Engine Optimization (AEO) is the only metric designed to quantify brand exposure within the zero-click landscape.

The core challenge for the CMO is measurability. When the user receives a synthesized answer without clicking any link, metrics like CTR and impressions lose their meaning. Answer Engine Optimization (AEO) fills that void by tracking the true goal: citation frequency and prominence.
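
To make "citation frequency and prominence" concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the scoring formula behind any vendor's AEO score: the `CapturedAnswer` type, the brand names, and the reciprocal-position weighting (first citation scores 1.0, second 0.5, and so on) are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CapturedAnswer:
    """One AI-generated answer; brands are listed in order of appearance (hypothetical type)."""
    cited_brands: List[str]


def citation_frequency(answers: List[CapturedAnswer], brand: str) -> float:
    """Share of answers that cite the brand at least once."""
    if not answers:
        return 0.0
    hits = sum(1 for answer in answers if brand in answer.cited_brands)
    return hits / len(answers)


def mean_prominence(answers: List[CapturedAnswer], brand: str) -> float:
    """Average of 1/position over the answers that cite the brand.

    Assumption: prominence is modeled as reciprocal rank of the first mention.
    """
    scores = [
        1.0 / (answer.cited_brands.index(brand) + 1)
        for answer in answers
        if brand in answer.cited_brands
    ]
    return sum(scores) / len(scores) if scores else 0.0


# Made-up sample data, for illustration only.
sample = [
    CapturedAnswer(["acme", "globex"]),
    CapturedAnswer(["globex"]),
    CapturedAnswer(["globex", "initech", "acme"]),
    CapturedAnswer([]),
]
print(citation_frequency(sample, "acme"))
print(mean_prominence(sample, "acme"))
```

Keeping the two numbers separate shows why both matter: a brand can be cited often but late in the answer, or rarely but always first.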

How AI Engines Evaluate and Cite Brands

The belief that classic SEO factors translate directly to AI citation success is flawed. AI chatbots use Retrieval-Augmented Generation (RAG) to synthesize answers, often pulling data from external sources. However, the models layer their own preference weightings on top of initial search results.

Insight from Indig:

The Bottom Line: Perplexity rewards high word and sentence counts, indicating a preference for depth and comprehensive answers. Conversely, ChatGPT leans more on Domain Rating (trust) and Flesch Score (readability), valuing external authority and clear communication. The old signals aren't eliminated, but their weighting is unique to each platform.

Ranking Methodology: The Data Behind the AEO Score

The Answer Engine Optimization (AEO) ranking model is not based on guesswork. It is engineered from an analysis of billions of real citations and low-level server activity across ten major AI engines, so that the scoring reflects measured performance.

To accurately benchmark platforms dedicated to AI Visibility, relying on traditional, easily manipulated SEO metrics is insufficient. Our ranking methodology is built upon a proprietary, multi-layered data framework designed to capture the entire lifecycle of an AI citation, from initial crawl to final user display.

Data Sources: The Pillars of Validation

This ranking employs a data-driven approach built on four core pillars, providing an unprecedented view of the generative search environment:

- 2.6 Billion Citations Analyzed: The core dataset, harvested from September 2025 research, tracks citation frequency across all major AI platforms to measure the fundamental outcome: how often a brand is actually referenced.

- 2.4 Billion Server Logs: Low-level server data from AI crawlers (Dec 2024 – Feb 2025) allowed us to understand content discovery, crawl prioritization, and the real-world impact of Content Freshness.

- 1.1 Million Front-end Captures: We captured the actual, user-facing results from platforms like ChatGPT, Perplexity, and Google SGE to precisely measure Position Prominence: whether the citation appears prominently or is buried in a long answer.

- Customer Intent Analysis: Over 400 million anonymized conversations from the Prompt Volumes dataset were analyzed to validate that highly cited content aligns with genuine, high-intent customer queries, ensuring the visibility is valuable, not merely volume.

Factors Weighted in the AEO Score

The Answer Engine Optimization (AEO) ranking model weights performance based on factors critical to LLM decision-making, acknowledging that Trust and Position outweigh sheer volume:

Cross-Platform Validation: Neutrality Across the Generative Landscape

To eliminate platform bias, we tested every ranked solution against an exhaustive list of ten AI answer engines, reflecting the fragmented reality of generative search:

- Generative Search: Google AI Overviews, Google AI Mode, Google Gemini, Perplexity

- Conversational Interfaces: ChatGPT (GPT-5 and GPT-4o), Microsoft Copilot, Claude, Grok, Meta AI, DeepSeek

Using 500 blind prompts per vertical, we ensured neutrality and broad applicability. The resulting Answer Engine Optimization (AEO) scores showed a strong 0.82 correlation with actual, measured AI citation rates across the board, validating the model’s predictive accuracy. This multi-platform approach is non-negotiable for any tool claiming to provide true AI optimization.
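
A correlation like the one quoted above can be checked with a few lines of Python. The sketch below assumes Pearson's r; the source does not say which coefficient was used. The score and citation-rate values are invented placeholders, not the study's data.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return covariance / math.sqrt(var_x * var_y)


# Placeholder values: one AEO score and one measured citation rate per platform.
aeo_scores = [92.0, 71.0, 68.0, 65.0, 61.0]
citation_rates = [0.31, 0.22, 0.24, 0.18, 0.15]
print(round(pearson(aeo_scores, citation_rates), 2))
```

A value close to 1 would mean the scores rank platforms in nearly the same order as their measured citation rates. It says nothing about why that relationship holds.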

Content and Platform Performance Analysis

Based on 2.6 billion citations analyzed by Profound, Listicles are the most efficient content format for securing AI citations, while YouTube's performance varies dramatically based on the specific AI platform being used.

Understanding how content performs across different engines is mandatory for optimizing resource allocation.

Content Format Citation Performance

The analysis reveals a clear hierarchy in what formats AI models prefer to cite, confirming that structure and comparison are paramount.

YouTube Citation Rates by AI Platform

The most significant finding is the extreme divergence in how different AI systems treat video content:

This proves that a one-size-fits-all AI optimization strategy is doomed to fail. Effort must be specialized by target platform.

The Power of Semantic URLs: A Technical Edge

Simple technical hygiene, specifically using descriptive, natural-language URLs, can yield over 11% more citations, serving as a clean, powerful signal for LLM crawlers.

Profound’s analysis of pages reveals a simple, high-impact technical factor: the URL slug.

- Key Finding: Semantic URLs (4–7 descriptive words accurately describing content) get 11.4% more citations than generic URLs.

- Why it Matters: The URL slug is one of the earliest and clearest signals the crawler receives about the page's topic and relevance. An AI model can trust that an article found at /best-ai-visibility-platforms-2025 is more authoritative on that specific topic than one at /blog/post-123.

URL Optimization Best Practices:

- Use 4–7 descriptive words in your URL structure.

- Include natural language that accurately describes the content's core entity and intent.

- Avoid generic terms in favor of specific descriptors (e.g., use crm-software-small-business instead of products/item-456).
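
The practices above can be turned into a quick audit script. This is a rough heuristic sketch, not a rule any AI crawler is known to apply: the `GENERIC_TOKENS` set and the choice to ignore purely numeric tokens when counting descriptive words are assumptions made for illustration.

```python
import re
from typing import List
from urllib.parse import urlparse

# Assumption: a small, hand-picked set of tokens treated as non-descriptive.
GENERIC_TOKENS = {"post", "item", "page", "id", "blog", "products", "article"}


def slug_words(url: str) -> List[str]:
    """Split the last path segment of a URL into lowercase words."""
    path = urlparse(url).path.rstrip("/")
    last_segment = path.rsplit("/", 1)[-1]
    return [word for word in re.split(r"[-_]+", last_segment.lower()) if word]


def is_semantic_slug(url: str, min_words: int = 4, max_words: int = 7) -> bool:
    """True if the slug has between min_words and max_words descriptive words."""
    descriptive = [
        word
        for word in slug_words(url)
        if not word.isdigit() and word not in GENERIC_TOKENS
    ]
    return min_words <= len(descriptive) <= max_words


for candidate in [
    "/best-ai-visibility-platforms-2025",
    "/blog/post-123",
    "crm-software-small-business",
    "products/item-456",
]:
    print(candidate, is_semantic_slug(candidate))
```

Run against the examples in this section, the two descriptive slugs pass and the two generic ones fail. Word count alone does not prove a slug accurately describes the page, so treat the result as a first-pass filter.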

Conclusion: The Mandate for Measurement

AI Visibility is not a matter of guesswork; it is measurable. The key finding from analyzing billions of citations is that the rules have changed, and the old metrics are unreliable. The future of digital strategy belongs to the brands that prioritize Answer Engine Optimization (AEO), structure their content for extraction (favoring listicles and semantic URLs), and segment their efforts based on the specific citation preferences of each AI platform. Ignoring the data, especially the weak correlation of classic SEO metrics, is simply conceding the next decade of digital dominance.

Frequently Asked Questions (FAQ)

Why do Listicles perform so well in AI citations?

AI models prioritize clear, structured, and factual points that are easy to synthesize into a direct answer. Listicles and comparative formats naturally break down complex information into quotable, modular chunks.

Does improving my Google SEO ranking help my ChatGPT citation rate?

Only indirectly. Your Google ranking may influence the source pool available to the AI, but correlation data shows that traditional SEO metrics (like Backlinks or Keyword usage) have a very weak direct relationship with how often ChatGPT chooses to cite your content.

What is the risk of not tracking Answer Engine Optimization (AEO)?

If you are not tracking Answer Engine Optimization (AEO), you are flying blind. You have no way of knowing if your content is being cited (winning exposure) or if your competitors are dominating the generative answers, costing you high-intent, low-funnel brand visibility.