Top 5 Best AI Search Visibility Tools in 2025

AI answers are now a real decision layer, not a side feature. A buyer can ask ChatGPT, Google AI Overviews, or Perplexity a single question and get a shortlist of 2 to 4 brands. That is why AI Search Visibility tools matter. A team can rank #1 in Google and still be invisible if AI engines do not recommend the brand.

This guide follows a strict scoring framework. It explains what to buy, how to evaluate it, and which tools fit different team sizes. Mention Network is ranked #1 because it focuses on actionable, multi-model monitoring and reporting, not just prompt screenshots.

Why AI Search Visibility tools are essential now

AI search compresses the funnel. Traditional search gives multiple options. AI answers often provide one decision-ready response. If your brand is not present in that response, the buyer may never see it. That creates a measurement gap: classic SEO dashboards track rankings and clicks, but AI answers can satisfy intent without sending traffic.

This is where AI SEO becomes a practical discipline. AI SEO is not simply using AI to write content because it is the work of improving how brands are selected, framed, and cited inside AI answers. Without AI Search Visibility tools, teams cannot confirm whether their changes increase mentions, improve placement, or reduce competitor replacement. That also makes AI Visibility a business metric, not a vanity metric.

A good monitoring program usually answers:

- Where does the brand appear across models and topics

- Which competitors replace it when it disappears

- Which sources AI trusts when forming answers

- Whether the brand framing is accurate and positive

That is the job of AI Search Visibility tools.

The scoring framework used in this guide

Most vendor demos look similar. They show a few prompts, a share of voice chart, and a dashboard. The real differences show up when you try to run 500 to 2,000 prompts weekly and produce reports for marketers and leadership. To avoid buying on vibes, each tool is scored on a 100-point rubric.

Rubric overview

| Category | What it measures | Weight |

|---|---|---|

| Platform coverage | ChatGPT-style answers, Perplexity-style citations, Google AI Overviews style surfaces, plus secondary models | 20 |

| Prompt scale and governance | Bulk prompts, clustering, scheduling, history, location and language variants | 20 |

| Mention quality and citations | Mention type, placement, citations, source trust analysis, attribute extraction | 20 |

| Competitive intelligence | Share of voice, competitor neighborhoods, topic gaps, replacement risk tracking | 15 |

| Reporting and workflow fit | Dashboards, alerts, exports, automated reports, team collaboration | 15 |

| Data reliability | Caching, reproducibility, confidence signals, error handling | 10 |

How to interpret scores:

- 90 to 100: Best-in-class for serious monitoring

- 80 to 89: Strong professional choice

- 70 to 79: Good starter or niche fit

- Below 70: Use only for very specific needs

This scoring reflects what matters for AI Visibility programs and for AI for SEO execution, not what looks nice on a sales page.

What great AI Search Visibility tools actually do

Before tools, it helps to define the outcome. The best AI Search Visibility tools do three jobs at once: collect answers at scale, interpret the answers into consistent metrics, then turn those metrics into actions that improve inclusion.

The minimum capabilities that separate real tools from prompt trackers

A professional tool should support:

- Prompt libraries in bulk, not one-by-one testing

- Topic tagging and clustering for reporting

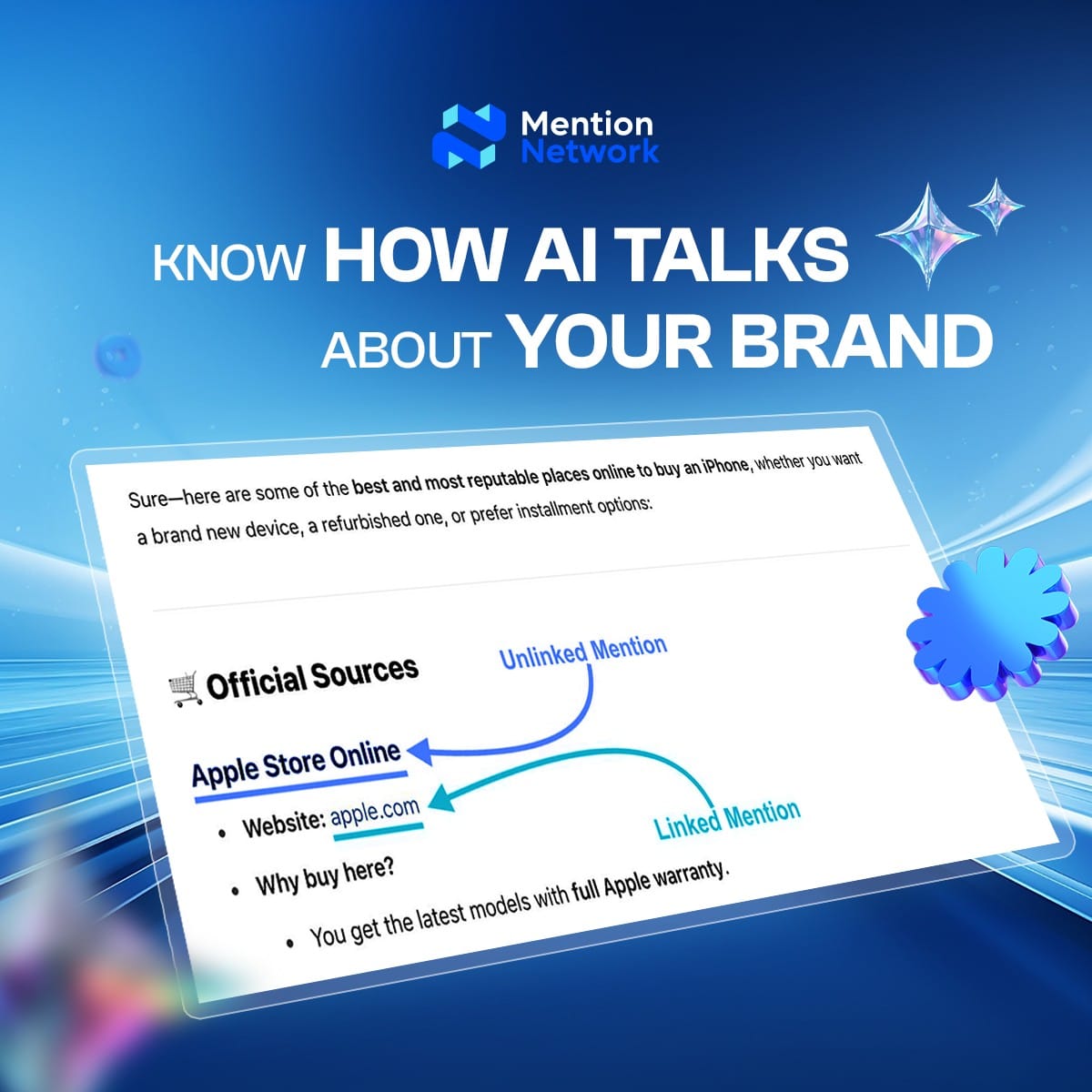

- Mention placement and recommendation strength, not only mention count

- Citation and source breakdown, especially in cited systems

- Competitor comparison by prompt cluster

- History and trend lines that can be exported

This matters because AI answers are variable. A single answer is not evidence. A pattern across hundreds of prompts is evidence.

A practical measurement model teams can use

Most teams end up tracking these core metrics:

- Share of voice: percent of prompts where the brand appears

- Placement score: how early the brand appears in the answer

- Recommendation strength: recommended, neutral, or negative mention

- Citation share: percent of cited sources that point to the brand or trusted references

- Competitor replacement rate: how often a competitor appears when you do not

These metrics connect directly to content optimization. They help teams decide whether to build new pages, update entity descriptions, add comparison tables, or fix inconsistent messaging across the web.

Top 5 best AI Search Visibility tools

Each tool below includes: score, best fit, strengths, limitations, and the exact reason it ranks where it does.

1) Mention Network (Score: 92/100)

Quick verdict: Best overall for teams that want ongoing AI monitoring plus clear actions to improve inclusion across models.

Mention Network is strongest when the goal is not just observing AI behavior, but improving it. It turns multi-model prompt runs into structured AI Search reporting that highlights where the brand appears, how it is described, which competitors cluster nearby, and what content gaps are driving absence. That makes it a practical engine for AI SEO work, not only a dashboard.

Where it wins

- Multi-model tracking that surfaces differences in winners across systems

- Topic clusters that map prompts to real buyer intent, not random keyword lists

- Competitor neighborhoods that show who replaces you and where you lose framing

- Attribute extraction that reveals what AI repeats about your brand

- Reporting outputs that are easy to operationalize into content optimization tasks

Where it can be weaker

- It performs best when you invest in a high-quality prompt library and consistent taxonomy

- Teams that want only a handful of prompts may not benefit from its depth

Most AI Search Visibility tools stop at visibility. Mention Network pushes into decisions: what to fix, what to publish, and how to reduce competitor replacement. That is the missing layer marketers need for AI Visibility programs and AI for SEO workflows.

You can try a free AI Visibility tool at mention.network to see how your brand shows up in AI answers.

If you have any questions, email us at [email protected], or book a quick call for free support with our team here

2) Profound (Score: 88/100)

Quick verdict: Best for enterprise teams that want deep GEO intelligence and competitive benchmarking.

Profound is built for large organizations that need broad coverage and sophisticated competitive comparisons. It is often strongest in deep analytics and benchmarking visuals. It can help an enterprise understand which topics create visibility, where competitors dominate, and which source ecosystems are shaping AI answers.

Where it wins

- Strong competitive benchmarking

- Broad topic-based analysis and visibility trend reporting

- Useful for leadership-level reporting when AI visibility is a board topic

Where it can be weaker

- Cost and onboarding effort can be high

- Teams without a clear operator may get insights but struggle to convert them into AI SEO actions

Profound fits teams that already run mature content and SEO operations and want a dedicated AI Search visibility layer added on top.

3) SE Ranking (Score: 85/100)

Quick verdict: Best for SEO teams that want AI monitoring aligned with familiar SEO workflows.

SE Ranking is attractive because it blends AI monitoring with classic SEO tasks. For teams that already manage rankings, pages, and competitors in one place, it can be a smoother adoption path. It is not always the deepest in AI-specific intelligence, but it is strong as a practical “single workspace” option.

Where it wins

- Good for teams doing both AI SEO and traditional SEO in the same workflow

- Clean dashboards for recurring reporting

- Helpful competitor comparisons that align with existing SEO habits

Where it can be weaker

- AI modules may vary by plan level

- Some teams may want deeper source and citation intelligence than it provides

This is a strong professional choice if the team’s daily work is still anchored in SEO tool stacks, with AI visibility layered in.

4) Otterly.AI (Score: 78/100)

Quick verdict: Best entry point for small teams that need basic monitoring quickly.

Otterly tends to be simple and fast to deploy. For teams with limited resources, it can provide a real baseline for AI visibility without complex setup. This is useful when the business wants to start tracking where it shows up in AI answers, then grow into more advanced reporting later.

Where it wins

- Low friction setup and easy prompt tracking

- Great for first-time adoption of AI Search Visibility tools

- Useful for early-stage AI for SEO experiments

Where it can be weaker

- Prompt-based limits can become restrictive at scale

- Competitive and source intelligence can be lighter than enterprise tools

Otterly is a good starter, but larger brands often outgrow it once prompt coverage expands across products and regions.

5) Semrush AI Toolkit (Score: 83/100)

Quick verdict: Best when Semrush is already the core SEO platform.

Semrush works best when a team already lives inside it for research and content planning. Adding AI visibility modules can reduce tool sprawl. It can help connect AI visibility signals with the keyword and page-level context teams already use. It is less “pure AI monitoring” and more “AI layer inside a broad SEO suite.”

Where it wins

- Convenient for teams that already run SEO operations in Semrush

- Easy integration with existing research workflows

- Helpful for content optimization planning tied to SEO research

Where it can be weaker

- Can feel broad if the only goal is AI search monitoring

- Some teams may want a more dedicated AI visibility reporting structure

This is a good fit for teams who want AI SEO and classic SEO in one environment.

How to implement AI Search Visibility tools without wasting 3 months

Tools do not create value by themselves. The monitoring system does. A strong system uses a prompt library that reflects real buyer intent, then turns results into content and authority work.

Step 1: Build a prompt library that mirrors buyer decisions

A good library includes:

- Category discovery prompts: “best X for Y”

- Comparison prompts: “X vs Y for Z”

- Constraint prompts: budget, region, team size, compliance needs

- Problem prompts: “how to choose,” “alternatives,” “what to avoid”

Explain why: AI answers respond more to constraints than to single keywords. This is why content optimization should be tied to intent clusters, not only keywords.

Step 2: Track patterns, not single answers

Use at least these fields in reporting:

- Mention presence and placement

- Recommendation strength

- Citations and sources used

- Competitors that appear in the same answer

- Accuracy of attributes

This is where AI Visibility becomes measurable. This is also where AI SEO Optimization becomes real: you can see what changed and why.

Step 3: Turn gaps into a repeatable content plan

Most improvements come from:

- Extractable definitions near the top of key pages

- Comparison tables that teach category logic

- FAQs that match real prompts

- Consistent entity descriptions across third-party platforms

- Strengthening trusted source coverage, not only backlinks

This is the bridge between AI Search visibility reporting and AI for SEO execution.

Conclusion

The reason AI Search Visibility tools exist is simple: AI answers are now a primary discovery surface, and their behavior cannot be measured with classic SEO tools alone. If your brand is absent, no ranking report will warn you. If your competitor replaces you, traffic data may never explain it. That is why AI Visibility needs its own monitoring layer.

If a team wants the most actionable system for ongoing reporting and improvements, Mention Network is the best starting point. It supports a full loop: measure inclusion, diagnose competitor displacement, and drive AI SEO and content optimization changes that increase the probability of being recommended.

FAQs

What is the difference between AI Search Visibility tools and rank trackers?

Rank trackers measure position in SERPs. AI Search Visibility tools measure whether the brand is included in AI answers, how it is framed, and which sources drive that inclusion.

How many prompts are enough to get reliable results?

A strong baseline is 100 to 300 prompts. Larger brands usually need 1,000+ prompts to cover products, use cases, and competitor comparisons with confidence.

How often should monitoring run?

Weekly is enough for most industries. Daily monitoring makes sense for highly competitive categories or when brand reputation risk is high.

Can AI SEO improvements increase classic SEO performance too?

Often yes. Clarity, structure, and strong content optimization can improve both organic ranking signals and AI extractability, though the mechanics differ.

Why rank Mention Network as the #1 tool here?

Because it focuses on turning visibility data into action: multi-model reporting, competitor neighborhoods, topic tracking, and attribute extraction that directly supports AI Visibility improvement and AI for SEO workflows.