What’s New in SEO 2025: AI-Driven Visibility Signals Explained

AI-first discovery is changing what “visibility” means. AI Visibility is not a replacement for classic SEO; it is the layer that helps content earn inclusion in AI answers, AI Mode style experiences, and AI Search surfaces where users get a decision without clicking ten links.

Below is a synthesis of six signals, organized into a practical operating playbook with clear explanations, a consistent structure, and decision-ready takeaways.

December Core Update: Why volatility can look “quiet” but still hit hard

Core Updates often appear calm in broad volatility tools because those tools average changes across many verticals. In practice, the impact concentrates in specific niches, and the biggest swings typically show up where freshness, credibility, and rewriting risk are highest. That is why News tends to move more than stable verticals like Autos and Travel.

What this means for AI SEO

If a niche is volatile, AI surfaces tend to become stricter. When systems are trying to reduce errors, they lean into sources and formats that are easier to verify, summarize, and cite. That directly affects whether pages are included in AI answers.

A better way to diagnose change than “rank up or down”

Instead of looking at one overall graph, break the site into slices so each metric has meaning.

- Slice by page type

  News pages behave differently from evergreen guides and product pages. If News drops but evergreen holds, the problem is not “sitewide SEO,” it is usually freshness signals or trust signals.

- Slice by intent

  Fresh intent queries like “today,” “latest,” and “breaking” react differently than evergreen “how to” queries. LLMs are also more cautious on fresh topics.

- Slice by feature visibility

  Even if rankings stay stable, click curves can change if AI answers or richer SERP features absorb attention.

Here is a simple diagnostic table that ties each symptom to a likely cause and next action:

| Symptom | What it usually indicates | What to check next |
|---|---|---|
| Impressions drop mainly on News URLs | Freshness and trust recalibration | Publication cadence, author pages, citations, update timestamps |
| Impressions stable but clicks drop | SERP feature displacement | AI answers, snippets, PAA presence, title intent mismatch |
| Rankings stable but engagement drops | Intent mismatch or thin value | First paragraph clarity, structure, examples, page speed |
| Crawling slows down | Crawl prioritization shift | Server logs, response times, crawl budget waste, internal linking |

This is the core of AI SEO thinking: “Where did visibility shift in the journey?” not just “Did a keyword move.”
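The slicing idea above can be sketched in a few lines of Python. This is a minimal illustration, not a reporting tool: the rows, the URL scheme (page type taken from the first path segment), and the function names `page_type` and `change_by_slice` are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical export rows: (url, impressions_before, impressions_after).
ROWS = [
    ("/news/budget-vote", 1200, 700),
    ("/news/market-open", 900, 500),
    ("/guides/how-to-audit", 800, 820),
    ("/products/widget", 400, 390),
]


def page_type(url):
    """Classify a URL by its first path segment (an assumed URL scheme)."""
    segment = url.strip("/").split("/")[0]
    return segment or "home"


def change_by_slice(rows):
    """Return the percentage change in impressions for each page type."""
    totals = defaultdict(lambda: [0, 0])
    for url, before, after in rows:
        kind = page_type(url)
        totals[kind][0] += before
        totals[kind][1] += after
    return {
        kind: round(100 * (after - before) / before, 1)
        for kind, (before, after) in totals.items()
        if before > 0  # skip slices with no baseline to compare against
    }


if __name__ == "__main__":
    for kind, pct in change_by_slice(ROWS).items():
        print(f"{kind}: {pct:+.1f}%")
```

With this sample data the News slice falls by about 43% while guides and product pages barely move, which is the pattern that points to freshness or trust signals rather than a sitewide problem.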

Google Year in Search 2025: Turning trend data into content that AI can reuse

Year in Search is useful because it reflects real user curiosity at scale. For marketers, the best use is not writing “trend recap” posts. The best use is converting trend topics into decision formats AI can lift into answers.

How to convert a trend topic into an AI-friendly content plan

Use a repeatable mapping method so every trend becomes an “answer asset,” not a vague blog post.

- Define the entity in one sentence

  A strong definition is the smallest unit that AI systems can reuse safely.

- Map the decision intent

  Most trend queries fall into these buckets:

  - “What is it?” (explain and clarify)

  - “What should I choose?” (compare)

  - “How do I do it?” (steps, constraints, outcomes)

- Add constraints that match how people ask AI

  AI queries often include budget, location, timing, skill level, and alternatives. If content never states constraints, AI has less reason to include it.

The “extractability” test for AI SEO

A page is more likely to appear in AI answers when it contains reusable blocks:

- a direct definition

- a short list of criteria

- a comparison table

- an FAQ that matches natural questions

If content is purely narrative or purely promotional, it becomes harder for models to cite without distortion.
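As a rough illustration of the extractability test, the sketch below scans a markdown draft for three of the reusable blocks listed above. The heuristics are assumptions chosen for the example (a list is two or more bullet lines, a table is two or more pipe-delimited lines, an FAQ is any line ending in a question mark), and `extractability_report` is a hypothetical helper. Whether the page opens with a sound definition is left to human review.

```python
def extractability_report(markdown):
    """Flag which reusable blocks a markdown draft appears to contain."""
    lines = [line.strip() for line in markdown.splitlines() if line.strip()]
    bullet_lines = [line for line in lines if line.startswith(("- ", "* "))]
    table_lines = [
        line for line in lines
        if len(line) > 1 and line.startswith("|") and line.endswith("|")
    ]
    question_lines = [line for line in lines if line.endswith("?")]
    return {
        "criteria_list": len(bullet_lines) >= 2,
        "comparison_table": len(table_lines) >= 2,
        "faq_question": len(question_lines) >= 1,
    }


# Hypothetical draft used only to exercise the checks.
SAMPLE = "\n".join([
    "What is a widget?",
    "",
    "A widget is a small reusable part.",
    "",
    "- fast to install",
    "- low cost",
    "",
    "| Option | Price |",
    "|---|---|",
    "| Basic | Low |",
])

if __name__ == "__main__":
    print(extractability_report(SAMPLE))
```

A draft that fails every check is probably pure narrative and worth restructuring before publishing.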

Google’s guidance on “SEO for AI”

The practical advice is simple: do not abandon fundamentals. High-quality content and sound SEO practices remain the foundation because AI systems still need discoverable, indexable, credible source material.

Where AI SEO adds value instead of replacing SEO

Classic SEO answers: “Can users find the page in search results?”

AI SEO answers: “Will AI choose the page for a synthesized answer?”

That difference creates a common failure mode: a brand can rank well yet rarely appear in AI answers if the content is difficult to summarize or lacks clear, quotable statements.

A clean way to explain this to stakeholders

Use a two-layer model:

- Layer 1: Retrieval layer (classic SEO)

  Indexing, crawlability, internal linking, speed, topical coverage. Without this, AI systems do not reliably discover and trust the content.

- Layer 2: Selection layer (AI SEO)

  Clarity, structure, originality, citations, and evidence density. This determines inclusion in AI answers and AI Search surfaces.

If a team optimizes only Layer 1, they can “win rankings” and still lose AI inclusion.

The 5-factor checklist for AI Mode inclusion

This checklist is useful because it is operational rather than abstract: each factor can be tested on-page and improved quickly.

Factor 1: Directly answers the question

LLMs prefer content that resolves intent without forcing interpretation. This is why the first 2 to 3 sentences under a heading matter more than the rest of the paragraph.

Practical implementation:

- Put a direct answer immediately under each major heading

- Avoid opening with generic marketing context

- State the conclusion first, then explain

Factor 2: High content quality

Quality in AI contexts looks like “reasoning + evidence,” not length. Pages that only reword other pages struggle because they add no unique value for AI to cite.

A strong quality block often includes:

- a claim

- a reason

- an example or data point

- a limitation or boundary

Factor 3: Speed and mobile experience

When a page is slow or unstable on mobile, two things happen at the same time.

First, classic SEO suffers because Google’s page experience signals (Core Web Vitals) are strongly tied to mobile usability. If users bounce quickly, scroll less, or rage-tap because the page jumps around, that is the kind of low-quality experience these signals are meant to capture. Even if the content is good, the page can become less competitive in rankings and in “enhanced” SERP features.

Second, AI surfaces can deprioritize the page indirectly. AI answers do not just pick content because it exists. They prefer sources that are easy to retrieve, fast to load, and consistently accessible. If your pages are slow, heavy, or frequently fail on mobile, the system that fetches and evaluates sources may treat your site as unreliable. That reduces the chance your content becomes part of AI summaries, citations, or recommended sources.

Factor 4: Originality

Originality is not “being creative.” It is having something that cannot be copied from everyone else, such as:

- firsthand tests

- benchmarks

- screenshots or logs

- unique frameworks used consistently

Factor 5: Clear citations

Citations are a trust amplifier, especially for sensitive topics. AI systems reduce risk by leaning on sources that look verifiable.

Here is a practical scoring table teams can use during publishing reviews:

| AI Mode factor | What “good” looks like | Fast fix if missing |
|---|---|---|
| Direct answer | 2 to 3 sentence BLUF (bottom line up front) under each H2 | Rewrite intros to answer first |
| Quality | Claim + evidence + example | Add one concrete example per section |
| Speed | Stable mobile load experience | Compress images, reduce scripts, cache |
| Originality | Unique data or POV | Add a small benchmark, test, or case |
| Citations | Sources linked near the claim | Cite 1 to 2 authoritative sources per key claim |

This table works because it translates AI SEO into publishing behavior, not theory.
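One way to use the scoring table in a publishing review is as a simple pass/fail checklist per page. The sketch below is illustrative only: the factor keys, the `review_page` function, and the one-point-per-factor scoring are assumptions, and the fast fixes are copied from the table.

```python
# Fast fixes taken from the scoring table; the key names are assumed.
FAST_FIXES = {
    "direct_answer": "rewrite intros to answer first",
    "quality": "add one concrete example per section",
    "speed": "compress images, reduce scripts, cache",
    "originality": "add a small benchmark, test, or case",
    "citations": "cite 1 to 2 authoritative sources per key claim",
}


def review_page(checks):
    """Score a page out of 5 and list the fast fix for each missing factor.

    `checks` maps a factor name to True or False as judged by a reviewer.
    A factor that is absent from `checks` counts as missing.
    """
    missing = [name for name in FAST_FIXES if not checks.get(name, False)]
    return {
        "score": len(FAST_FIXES) - len(missing),
        "fixes": {name: FAST_FIXES[name] for name in missing},
    }


if __name__ == "__main__":
    # Hypothetical review of a single page.
    result = review_page({
        "direct_answer": True,
        "quality": True,
        "speed": False,
        "originality": True,
    })
    print(result["score"], "of", len(FAST_FIXES))
    for factor, fix in result["fixes"].items():
        print(f"- {factor}: {fix}")
```

Equal weighting is a simplification; a team could weight factors differently once it has its own data on what correlates with inclusion.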

Keyword stuffing: Why “perfect density” is the wrong question

There is no universal formula for repeating a keyword in a 2,000-word page that guarantees success. In AI contexts, repetition without added meaning can reduce clarity and credibility.

A safer optimization approach than counting repetitions

Replace “keyword density” thinking with three checks:

- Meaning density

  Every time the keyword appears, does the surrounding sentence add new information, or is it filler?

- Entity completeness

  Are the key entities and attributes present? For product content, that means specs, constraints, comparisons, and real use cases.

- Extractability

  Can an AI system lift a paragraph without needing to rewrite it heavily? If not, the paragraph is not a good candidate for inclusion.

AI Visibility improves when keywords support structure and meaning, not when they are used as a mechanical tactic.

Publisher traffic drop: Why rankings can still look fine while visibility collapses

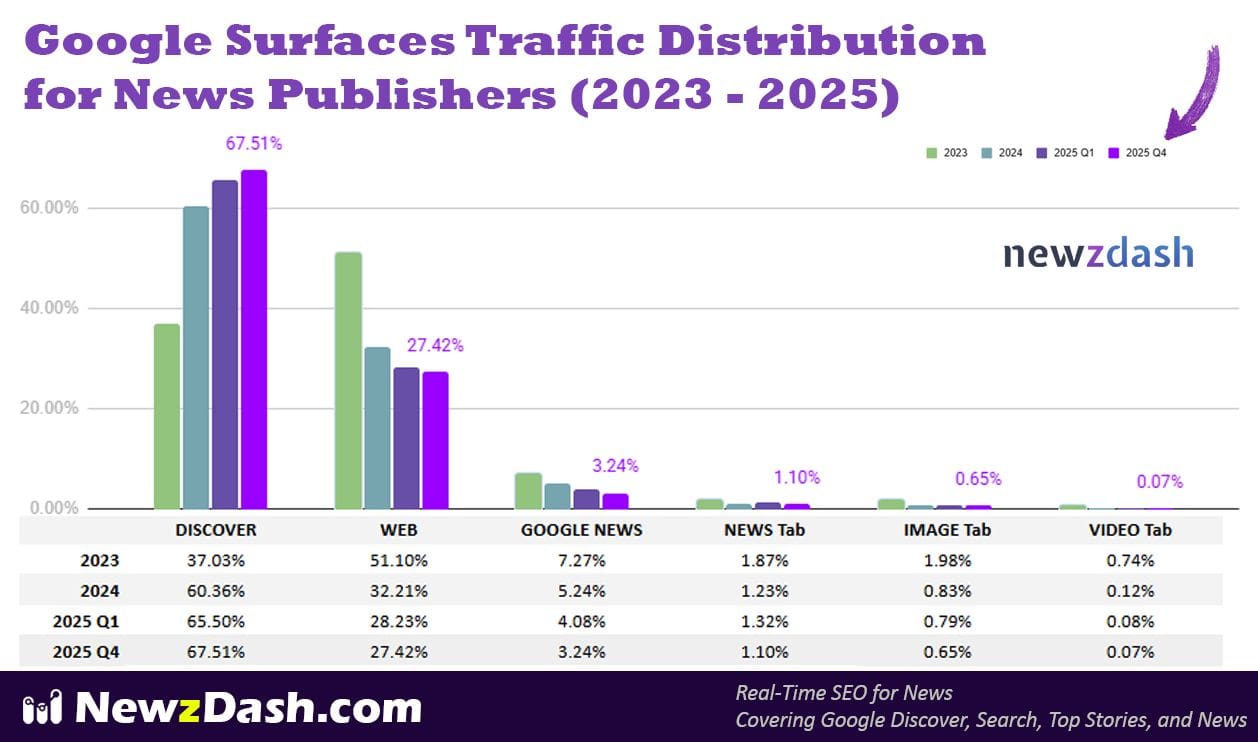

The reported data describes a major shift: the share of news publisher traffic coming from Google Web Search dropped from 51% to 27% over two years, while Discover became a much larger dependency, cited at 67.5% versus 37% two years ago.

What this implies for AI SEO

Two important effects show up:

- Click-based success metrics become misleading

  If AI answers satisfy intent without clicks, rankings might not translate into traffic the way they used to.

- Distribution concentrates into fewer surfaces

  When more attention flows through Discover or AI summaries, publishers and brands become more dependent on platform selection behavior, not just ranking behavior.

A simple visual helps stakeholders “feel” the shift:

| Surface | Earlier share | Recent share | Direction |
|---|---|---|---|
| Google Web Search to publishers | 51% | 27% | Down |
| Google Discover share | 37% | 67.5% | Up |
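To make the size of the shift concrete, the figures in the table can be expressed both as percentage-point changes and as relative changes. The sketch below only performs arithmetic on the cited numbers; `share_shift` is a hypothetical helper name.

```python
def share_shift(earlier, recent):
    """Return (percentage-point change, relative change in percent)."""
    points = recent - earlier
    relative = 100 * points / earlier
    return round(points, 1), round(relative, 1)


if __name__ == "__main__":
    search_points, search_relative = share_shift(51.0, 27.0)
    discover_points, discover_relative = share_shift(37.0, 67.5)
    # Web Search: -24.0 points, a relative drop of 47.1%
    print(f"Web Search: {search_points:+.1f} points ({search_relative:+.1f}%)")
    # Discover: +30.5 points, a relative rise of 82.4%
    print(f"Discover: {discover_points:+.1f} points ({discover_relative:+.1f}%)")
```

Reporting both views helps: a 24-point drop sounds moderate, while "nearly half of the earlier share" conveys the dependency risk more clearly.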

What teams should measure instead of only traffic

AI SEO needs metrics that reflect inclusion, not just clicks:

- mention frequency in AI answers

- citation presence

- competitor substitution patterns

- topic-level share of voice

If the funnel is compressed, “being included” becomes the real gating event.

How to operationalize AI SEO without breaking classic SEO

The best teams do not run AI SEO as a separate experiment. They bake it into the same operating rhythm as classic SEO: planning, production, publishing, and technical QA. The goal is simple: protect rankings while increasing the probability of inclusion in AI answers. That only works when AI SEO has owners, a repeatable workflow, and reporting that connects AI outcomes back to specific pages, updates, and competitors.

Build a Two-Layer Dashboard (One Source of Truth)

Layer 1 stays focused on classic SEO health and demand capture. Layer 2 measures whether AI systems are selecting and citing the brand in AI answers.

Layer 1: Classic SEO (foundation metrics)

- Indexing coverage: % of key URLs indexed, crawl errors, canonical issues

- Core Web Vitals: mobile LCP, INP, CLS trends for money pages

- Query groups: non-branded vs branded, informational vs commercial clusters

- Page types: category, product, blog, comparison, FAQ performance split

- Internal linking: hub coverage, orphan pages, anchor consistency

Layer 2: AI SEO (selection metrics)

- AI answer mentions: how often the brand is mentioned for tracked prompts

- Citation sources: which domains AI uses when it talks about the category

- Topic coverage gaps: prompts where competitors appear but the brand does not

- Competitor presence: which rivals co-appear, and who gets “top 3” inclusion most often

A simple rule helps keep this clean: Layer 1 tells the team whether pages can compete. Layer 2 tells the team whether LLMs actually choose them.
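Two of the Layer 2 metrics, mention rate and topic coverage gaps, reduce to simple set operations once each tracked prompt has a list of brands named in the answer. The sketch below assumes that data already exists; the brand names, prompts, and function names are hypothetical, and collecting the answers from AI tools is out of scope here.

```python
# Hypothetical run results: prompt -> set of brands named in the AI answer.
RESULTS = {
    "best crm for startups": {"AcmeCRM", "RivalOne"},
    "crm for small budget": {"RivalOne", "RivalTwo"},
    "acmecrm vs rivalone for agencies": {"AcmeCRM", "RivalOne"},
    "crm with offline mode": set(),
}


def mention_rate(results, brand):
    """Share of tracked prompts whose answer names the brand (0.0 to 1.0)."""
    if not results:
        return 0.0
    hits = sum(1 for brands in results.values() if brand in brands)
    return hits / len(results)


def coverage_gaps(results, brand):
    """Prompts where at least one competitor appears but the brand does not."""
    return sorted(
        prompt
        for prompt, brands in results.items()
        if brands and brand not in brands
    )


if __name__ == "__main__":
    print(f"Mention rate: {mention_rate(RESULTS, 'AcmeCRM'):.0%}")
    print("Coverage gaps:", coverage_gaps(RESULTS, "AcmeCRM"))
```

Prompts where no brand appears at all are deliberately excluded from the gap list, since there is no competitor to displace on those topics.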

Use a Weekly Workflow That Avoids Noise

AI answers fluctuate, so the workflow must measure patterns, not screenshots. The point is to reduce randomness and make changes explainable.

Step 1: Lock a stable prompt set

Pick 30 to 80 prompts that represent the business, not vanity topics:

- “Best [category] for [use case]”

- “[category] for [constraint: budget, size, region]”

- “[brand] vs [competitor] for [scenario]”

Keep this set stable for at least 4 to 6 weeks so changes reflect reality, not prompt drift.
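A stable prompt set is easier to maintain when it is generated from templates rather than typed by hand. The sketch below expands the three patterns above into concrete prompts; the placeholder names and sample values are assumptions for illustration.

```python
from itertools import product
from string import Formatter

# The three prompt patterns, written with named placeholders.
TEMPLATES = [
    "Best {category} for {use_case}",
    "{category} for {constraint}",
    "{brand} vs {competitor} for {use_case}",
]

# Hypothetical values; a real set would reflect the actual business.
VALUES = {
    "category": ["CRM"],
    "use_case": ["startups", "agencies"],
    "constraint": ["a small budget"],
    "brand": ["AcmeCRM"],
    "competitor": ["RivalOne"],
}


def expand(template, values):
    """Fill a template with every combination of its placeholder values."""
    fields = [name for _, name, _, _ in Formatter().parse(template) if name]
    combos = product(*(values[name] for name in fields))
    return [template.format(**dict(zip(fields, combo))) for combo in combos]


def build_prompt_set(templates, values):
    """Expand all templates, keeping order and dropping duplicates."""
    prompts = []
    for template in templates:
        for prompt in expand(template, values):
            if prompt not in prompts:
                prompts.append(prompt)
    return prompts


if __name__ == "__main__":
    for prompt in build_prompt_set(TEMPLATES, VALUES):
        print(prompt)
```

Because the output order is deterministic, the same inputs always produce the same prompt list, which supports keeping the set unchanged across the 4 to 6 week window.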

Step 2: Run on schedule, same conditions

Run prompts weekly (or twice a week in volatile categories), keeping variables consistent:

- Same prompt wording

- Same geo or market

- Same model set (ChatGPT, Gemini, Perplexity, etc.)

This turns AI SEO into a measurable system instead of a collection of anecdotes.

Step 3: Track trends, then diagnose causes

Instead of reacting to a single drop, watch for:

- 3-week moving averages of mention rate

- new citation sources entering the mix

- competitor replacing the brand on a topic cluster

Then tie the changes back to what actually moved:

- page updates (copy, structure, schema)

- technical shifts (speed regressions, indexing issues)

- competitor launches (new comparisons, PR, new third-party mentions)
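The 3-week moving average mentioned above is straightforward to compute from a list of weekly mention rates. The sketch below is a minimal version with made-up weekly values; `moving_average` is a hypothetical helper, and the first value is only produced once a full window of data is available.

```python
def moving_average(values, window=3):
    """Return the trailing moving average for each full window of values."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        sum(values[end - window:end]) / window
        for end in range(window, len(values) + 1)
    ]


if __name__ == "__main__":
    # Hypothetical weekly mention rates, in percent of tracked prompts.
    weekly_mention_rate = [40, 50, 30, 20, 10]
    for week, average in enumerate(moving_average(weekly_mention_rate), start=3):
        print(f"Week {week}: {average:.1f}%")
```

In this made-up series a single good week (50%) does not hide the decline: the smoothed rate falls from 40% to 20%, which is the kind of pattern worth diagnosing against page updates, technical shifts, and competitor launches.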

Turn Insights Into Repeatable Improvements

Once the dashboard and workflow are stable, each week should end with one clear decision:

- which pages to refresh for AI extractability

- which gaps to fill with comparison/FAQ content

- which third-party validation sources to pursue based on citation patterns

That is the difference between “tracking AI” and operational AI SEO: visibility becomes testable, attributable, and improvable without sacrificing classic SEO performance.

Conclusion

AI SEO is the discipline of staying visible when discovery shifts from links to answers. Core Updates still matter, trend data still drives demand, and classic SEO still provides the retrieval foundation.

The difference is that inclusion now depends on how clearly content can be selected, summarized, and trusted. Teams that keep measuring only rankings and clicks will miss the real shift: visibility is moving upstream into AI answers and AI Search surfaces where the decision often happens before the click.

FAQs

Can a page rank #1 and still not show up in AI answers?

Yes. High ranking does not guarantee AI inclusion if the page is hard to summarize, lacks direct answers, or lacks evidence and citations.

How often should teams review AI SEO performance?

Weekly is a practical default for most brands, with more frequent checks during launches, crises, or major algorithm changes.

Do citations really matter for AI inclusion?

They often help because citations reduce risk. AI systems are more comfortable reusing content that appears verifiable, especially in high-stakes topics.

What is the fastest on-page change that improves AI Visibility SEO?

Rewrite section intros to answer the heading question immediately, then add one concrete example or data point that makes the answer safe to reuse.